Tech Secrets: Top 3 Quantum Computing Breakthroughs That Change Everything

For decades, quantum computing has been the stuff of science fiction: a tantalizing promise of unthinkable power in a distant future, confined to sterile laboratory experiments and theoretical physics papers. The story has always been, “It’s coming, but it’s 20 years away.” Well, the game has quietly, seismically changed. The future is no longer on the horizon; it is taking shape in research laboratories today.

The conversation has shifted from “if” to “when” and, more importantly, “how.” We are seeing a series of breakthroughs that are not merely incremental improvements but fundamental leaps, solving many of the problems that have held quantum technology back. These are the secrets not yet understood by the mainstream, the fundamental shifts that change everything. This article pulls back the curtain on three such breakthroughs that are turning quantum computers from a theoretical marvel into a practical, world-transforming force.

Breakthrough #1: The Error Correction Revolution

At the heart of quantum computing’s power, and its biggest challenge, lies the qubit. Unlike a classical bit, which is definitely a 0 or a 1, a qubit can exist in a superposition of 0 and 1 at the same time. This, combined with entanglement (a “spooky” connection between qubits), gives a quantum computer its unique capabilities. However, this quantum state is incredibly fragile. The slightest environmental noise, such as a stray photon, a vibration, or a temperature change, can cause qubits to decohere, collapsing their state and introducing errors.

For years, this fragility meant that quantum computers could only perform simple calculations before they became unreliable. A fault-tolerant quantum computer, one able to run complex, useful algorithms, requires quantum error correction (QEC). The principle was simple: combine many unreliable “physical” qubits into a single, highly reliable “logical” qubit. In practice, however, it was a monumental engineering barrier.
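
The idea of building one reliable unit out of many unreliable ones can be illustrated with a classical repetition code and a majority vote. This is only a loose analogue, offered as a sketch: real QEC schemes detect errors through syndrome measurements without reading the data qubits directly, and they must handle phase errors as well as bit flips. The function names below are illustrative and not taken from any library.

```python
def encode(bit: int, n: int = 3) -> list[int]:
    """Copy one logical bit into n physical bits (n should be odd)."""
    return [bit] * n


def apply_flips(bits: list[int], positions: list[int]) -> list[int]:
    """Flip the bits at the given positions to model physical errors."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(bits)]


def decode(bits: list[int]) -> int:
    """Recover the logical bit by majority vote."""
    return 1 if sum(bits) > len(bits) / 2 else 0


codeword = encode(1, n=5)                        # [1, 1, 1, 1, 1]
one_error = apply_flips(codeword, [2])           # one physical error
three_errors = apply_flips(codeword, [0, 2, 4])  # errors on a majority of bits

print(decode(one_error))     # -> 1 (the single flip is outvoted)
print(decode(three_errors))  # -> 0 (too many flips, the logical bit is lost)
```

The logical bit survives as long as fewer than half of the physical bits are corrupted, which is why the physical error rate has to be low enough before redundancy starts to help.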

1. The Secret Unveiled

The breakthrough came when researchers, notably at companies such as Google and IBM and at institutions such as Harvard and QuTech, finally demonstrated that QEC could not only be implemented but could actually bring the error rate down. In a landmark 2023 experiment, Google Quantum AI, the team known for its “quantum supremacy” experiment, demonstrated an improvement from error correction. Its key innovation was a system in which adding more physical qubits to a logical qubit reduced the error rate, a clear indication that QEC scaling laws were working in practice.

This was not just a small improvement; it was a fundamental proof of concept. Think of building the first transistor after decades of working with vacuum tubes. It proved that the primary obstacle to scalable quantum computing was not a law of physics but an engineering problem, and one that now has a clear, viable solution.
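
The scaling behaviour described here, where adding more physical units lowers the logical error rate, can be sketched with the same kind of toy model. The example assumes a classical repetition code with independent bit flips of probability `p` and majority-vote decoding; it is not the code used in the experiment above, and its threshold of 0.5 is specific to this toy model. It only shows the qualitative point that redundancy helps below a threshold and hurts above it.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Probability that more than half of d independent bits flip (d odd)."""
    first_failing = d // 2 + 1  # smallest number of flips that fools the vote
    return sum(
        comb(d, k) * p**k * (1 - p) ** (d - k)
        for k in range(first_failing, d + 1)
    )


for p in (0.01, 0.6):  # one value below and one above the toy threshold
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    print(f"p={p}: " + ", ".join(f"d={d}: {r:.2e}" for d, r in zip((3, 5, 7), rates)))
```

With `p = 0.01` the logical error rate falls as `d` grows from 3 to 7; with `p = 0.6` it rises, because the extra bits only add more noise.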

2. Why This Changes Everything

This breakthrough is the bedrock upon which the entire quantum future can be built. Without robust error correction, quantum computers would remain curiosities. With it, the door is open to:

1. Drug Discovery and Materials Science: Simulating complex molecules for life-saving pharmaceuticals or next-generation batteries requires sustained, error-free computation that is out of reach for classical computers.

2. Climate Change Solutions: Modeling new catalysts for carbon capture or designing more efficient solar cells involves so many variables that only a fault-tolerant quantum computer could tackle them accurately.

3. Unbreakable Encryption (and the Need for New Standards): While a powerful quantum computer could break modern encryption, QEC is the critical step that makes this a tangible future reality, forcing the world to adopt quantum-resistant cryptography.

The Error Correction Revolution means we are not simply building qubits; we are building a robust structure in which they can carry out meaningful computations.

Breakthrough #2: The Rise of Modular Quantum Computers

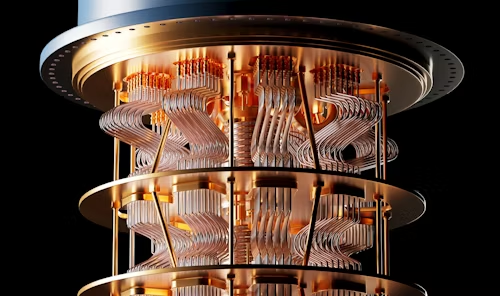

The prevailing image of a quantum computer is a single, outrageously complex machine: a chandelier of wires descending to a single quantum processor inside a super-cooled dilution refrigerator. However, this monolithic approach hits a hard ceiling. How do you fit millions of qubits into a single, ultra-cold environment? The engineering challenges are immense.

The second great breakthrough is a paradigm shift in design: modular quantum computing. Instead of building one huge processor, the new approach is to create a network of smaller, more stable quantum processors and connect them seamlessly. It is the same principle that transformed classical computing from room-sized mainframes into networks of millions of small, powerful PCs.

1. The Secret Unveiled: Quantum Links and the “Quantum Data Center”

The key technology enabling this shift is the development of reliable quantum interconnects. Scientists have made surprisingly rapid advances in using photons (particles of light) to create quantum links between separate quantum chips. In 2022, a team at QuTech in the Netherlands demonstrated a multi-chip quantum module in which qubits on different chips could be entangled through quantum communication links.

This is like creating a quantum version of a USB or Ethernet cable, but one that carries a delicate quantum state. It allows individual quantum modules, each performing a specific task, to work together as a single, larger, more powerful computer. Companies such as IBM and Atom Computing are now staking their long-term public roadmaps on this modular architecture.

2. Why This Changes Everything

Modularity solves the scalability problem elegantly and practically.

1. Scalability: Networking 100 modules of 1,000 qubits each is far easier than building a single 100,000-qubit processor. It removes the primary physical bottleneck to building a large-scale quantum computer.

2. Specialization: Different modules can be designed for different purposes. One module can be optimized for error correction, another for a specific type of calculation, and others for various ongoing tasks, much as a classical data center uses CPUs, GPUs, and TPUs in concert.

3. Resilience and Maintenance: If a module fails, it can be isolated and repaired without bringing the entire system down, a game changer for practical, commercial deployment.

This modular approach turns quantum computing from a laboratory experiment into a viable technology platform, paving the way for tomorrow’s quantum data centers.
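
The scalability and resilience arguments above reduce to simple bookkeeping, sketched here. The `Module` class and helper functions are hypothetical names chosen for illustration, and the model deliberately ignores interconnect overhead, link fidelity, and error-correction overhead, all of which matter in a real system.

```python
from dataclasses import dataclass
from math import ceil


@dataclass
class Module:
    """One quantum processor in a networked cluster (illustrative only)."""
    qubits: int
    online: bool = True


def modules_needed(target_qubits: int, qubits_per_module: int) -> int:
    """Number of equal-sized modules required to reach a target qubit count."""
    return ceil(target_qubits / qubits_per_module)


def available_qubits(modules: list[Module]) -> int:
    """Total qubits across the modules that are currently online."""
    return sum(m.qubits for m in modules if m.online)


count = modules_needed(100_000, 1_000)
cluster = [Module(qubits=1_000) for _ in range(count)]

cluster[7].online = False  # isolate one failed module for repair
print(count, available_qubits(cluster))  # -> 100 99000
```

Taking one module offline costs 1% of capacity in this sketch, whereas a fault in a monolithic processor would take the whole machine down.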

Breakthrough #3: Algorithmic Leaps – Doing More with Less

Even as the hardware improves, stable, logical qubits remain a precious resource. The third breakthrough is not in the fridge but in the code. Quantum algorithm designers are achieving a monumental feat: they are finding ways to run powerful, useful algorithms on machines with fewer qubits than previously expected.

For years, the perception was that we would need millions of high-quality qubits to do anything really useful. That goalpost is moving dramatically.

1. The Secret Unveiled: “Quantum-Centric Supercomputers” and Hybrid Algorithms

The breakthrough is the development of sophisticated hybrid algorithms and smart circuit compression techniques. Instead of waiting for a quantum computer to run an entire problem from beginning to end, researchers break problems into small, manageable pieces. A classical supercomputer handles the parts it is good at (data management, certain types of calculations), while quantum processors are used as ultra-powerful accelerators for the specific, computationally hard parts of the problem.

This “Quantum-Centric Supercomputer” model, a term coined by IBM, is already producing results. Companies such as BMW and Boeing use these hybrid approaches on today’s noisy intermediate-scale quantum (NISQ) machines to optimize complex supply chains and simulate airflow over new wing designs. These are not abstract experiments; they are commercial applications running on existing hardware.
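
The division of labour described above, with a classical optimizer steering a quantum subroutine, can be sketched as a small variational loop. Everything here is an assumption for illustration: the “quantum” step is simulated classically for a single qubit, the function names are hypothetical, and a production workflow would call real hardware or a simulator through a vendor SDK instead.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: <Z> after applying RY(theta) to |0>."""
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0**2 - amp1**2    # P(0) - P(1), which equals cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient estimated from two extra evaluations of the quantum step."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift) - quantum_expectation(theta - shift))


def minimize(theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100) -> tuple[float, float]:
    """Classical outer loop: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


theta, energy = minimize()
print(f"theta={theta:.4f}, energy={energy:.4f}")
```

The classical side only ever sees numbers returned by `quantum_expectation`, so the quantum processor is used purely as an accelerator for the one step it is suited to. In this toy problem the loop should settle near `theta = pi`, where the expectation value reaches its minimum of -1.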

2. Why This Changes Everything

This algorithmic ingenuity dramatically accelerates the timeline for practical quantum advantage.

1. Near-Term Impact: We do not need to wait for fault-tolerant machines to get value from quantum technology. Hybrid algorithms will provide commercial and scientific insight over the next 3-5 years, creating a feedback loop that drives further investment and development.

2. Democratization of Access: Cloud-based quantum computing, where companies can rent time on these hybrid systems, allows a wide range of industries to experiment and innovate without owning a dedicated quantum computer.

3. Shifting the Goalposts: It moves the focus from a pure “qubit count” arms race to a more nuanced measure of “quantum utility”: can we solve real-world problems with today’s hardware?

This breakthrough ensures that the quantum revolution will be a gradual climb, not a single big bang, with tangible benefits appearing much sooner than anyone predicted.

The Convergence: A New Tech Dawn

Individually, each of these breakthroughs is a monumental achievement. But their real power lies in their convergence. Robust error correction makes modular designs viable. Modular design provides a scalable architecture for complex algorithms. And these algorithms extract real-world value from the entire system.

This virtuous cycle is what marks the real tipping point. The story that quantum computing is “decades away” is officially obsolete. The fundamental technical secrets are out. Engineering challenges are being solved at a remarkable pace. We are on the verge of a new era of computing, one that will redefine everything from medicine and finance to logistics and climate science. The quantum future is not a distant promise; it is being built now, stable module by stable module, smart algorithm by smart algorithm. The next big discovery in any field may be found not in a petri dish or through a telescope, but in a coherent, entangled state. The race is on.

1. What are the three recent quantum computing breakthroughs?

Recent breakthroughs include: (1) error-corrected logical qubits that maintain stability longer than physical qubits, (2) quantum processors with over 1,000 high-fidelity qubits enabling more complex computations, and (3) room-temperature quantum memory using novel materials like diamond NV centers, reducing reliance on extreme cooling.

2. Why do these breakthroughs matter?

They address core challenges—qubit stability, scalability, and operating conditions—bringing practical, large-scale quantum computers closer to reality. This could revolutionize fields like drug discovery, cryptography, and AI optimization.

3. When will these advances impact everyday technology?

While full-scale quantum computing is still years away, early applications in secure communications, material science, and specialized optimization problems could emerge within 5–10 years.